Nine months after the spectacular public launch of ChatGPT, the one question nearly every marketing leader has been asked is “So, what are we doing with AI?” The real answer is that marketers have been using different forms of AI for years. It’s just been doing boring, behind-the-scenes work like data analysis, lead scoring, media optimization, and predictive analytics – exactly the sort of work that the typical board member or college president wouldn’t readily notice.

More often than not, what our curious colleagues are really asking about isn’t AI broadly, but generative AI, specifically – the popular high school quarterback of AI that made headlines by churning out vast quantities of competently average text, fake images of the Pope in a puffer jacket, and convincing impersonations of Drake and The Weeknd in seconds.

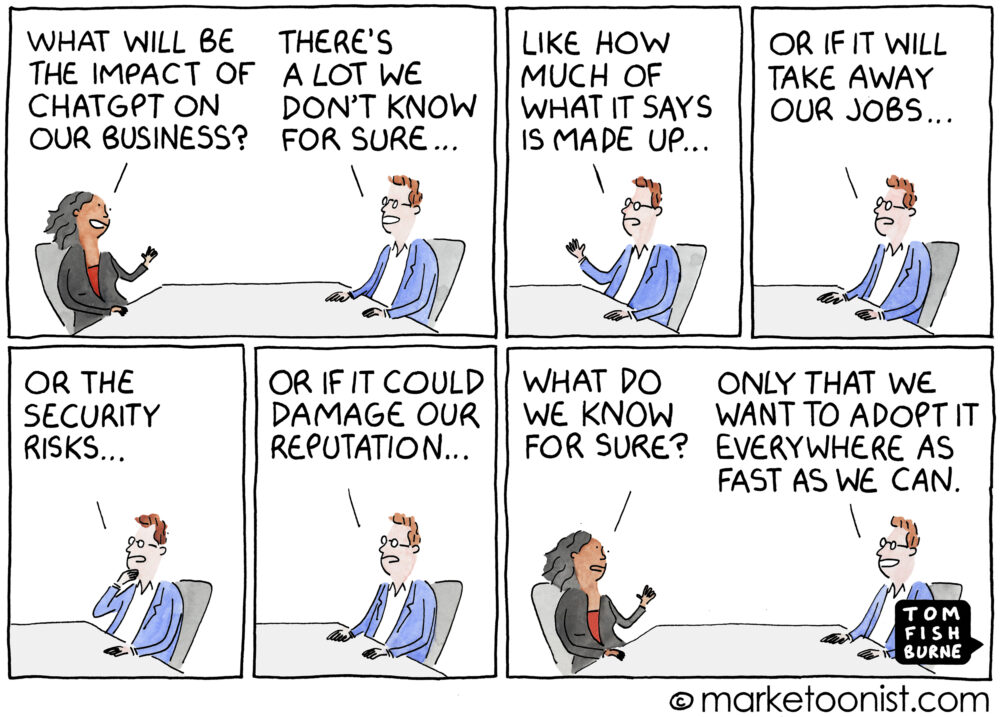

No one’s quite sure what generative AI really means for their organization, but based on a highly scientific review of my LinkedIn feed, the consensus among most thought leader/agency types is that we all need whatever it is right now.

For the record, I’m not a complete AI grump. I’m sure generative AI will change aspects of marketing, creative services, and the larger world of work. And I do believe it’s in our best interests, as forward-thinking marketers, to understand these tools and the value they can provide.

I also believe where these tools are taking us is largely unpredictable and not entirely risk-free. Setting aside the world-ending existential threats that may or may not come with AI, there are plenty of mundane legal, ethical, and operational risks that definitely do exist for the average marketer looking to experiment with generative AI in their day-to-day work.

Against this backdrop, I’ve developed six guiding principles that I hope will help marketers get the most value out of generative AI with the least risk. These principles aren’t just an academic exercise. We’ve rolled them out to our team here at the agency and expect everyone to follow them as we explore ways these tools can improve how we work.

Six Guiding Principles for Using Generative AI Responsibly in Marketing

Human Accountability

AI software is just that – software. In the same way we’re on the hook for responsibly using spreadsheets, we’re also on the hook for responsibly using generative AI. There can be no finger-pointing back at the AI if something goes wrong. We must take ultimate responsibility for if, how, and when we use AI tools in our work.

Protecting Data Privacy and Security

As marketers, our work frequently gives us privileged access to sensitive information like long-term strategies, contact records, proprietary research, and other secret sauce. We must ensure that any data used for training or operating AI tools is handled appropriately with respect to privacy laws, ethical guidelines, confidentiality, and information security.

Even seemingly benign uses of these tools can pose a risk when we don’t understand the privacy and security implications of the systems we’re using. Case in point: Samsung employees unintentionally leaked sensitive information when they used ChatGPT to optimize confidential source code and summarize meeting transcripts. That information may now be retained by the service and could potentially be used as training data to “improve” ChatGPT’s responses to other users.

As a rule, unless the tool in question has been thoroughly vetted, we should assume anything we enter into it will become publicly available.

Respect for Intellectual Property

Generative AI tools present a number of emerging issues with regard to intellectual property rights. For instance, when generative AI systems are trained on protected works, there’s a very real risk that AI-generated outputs could inadvertently infringe on someone else’s intellectual property rights. Additionally, the US Copyright Office (USCO) has only provided limited guidance on the extent to which AI-generated work can be copyrighted. Currently, the USCO’s position is that work generated by AI using only a text description or prompt from a human user cannot be copyrighted, while work that was created by AI and then modified by a human is more likely to be copyrightable. With these issues in mind, it’s every marketer’s job to establish processes and safeguards that protect their organization and the rights of others.

Quality Control

AI isn’t a magical black box that just does things right. Just like humans, AI tools are capable of mistakes, inaccuracies, omissions, and outright fabrication. We can never assume AI-generated content is ready to be published without a thorough human review to confirm the trustworthiness of the information it contains.

Respect for the End User

Our world is flooded with mediocre content and experiences, and generative AI tools will most likely accelerate this problem. It’s no accident that during the last half of 2022, Google announced multiple updates to its search algorithm and Search Quality Rater Guidelines to focus on high-quality, human-created content.

Google’s updated guidelines explicitly focus on “the extent to which a human being actively worked to create satisfying content” and “the extent to which the content offers unique, original content that is not available on any other websites.” This should give pause to anyone hoping to use large language model tools like ChatGPT to churn out content quickly. As creative professionals, it’s our job to hold a high standard and add uniquely human value to the work we create. If we’re not doing that, we’re only adding to the noise.

Avoiding Bias

While AI tools may offer the promise of objectivity, in reality, they reflect the shortcomings of the data they were trained on. Amazon found this out when it trained an algorithm on 10 years’ worth of hiring data in order to develop a tool that could analyze resumes and suggest the best hires. The result? Engineers found their new tool was excellent at perpetuating the hiring practices of the previous decade by identifying and preferentially selecting male candidates.

An analysis of Stable Diffusion – the AI tool that powers image generation features for companies like Canva and Adobe – found the tool frequently amplified gender and racial stereotypes. For instance, the AI drastically underrepresented women when tasked with creating images of high-paying jobs like judges or doctors. When tasked with creating images for keywords like “fast food worker” or “inmate,” the outputs were heavily skewed towards darker skin tones, even though 70% of fast food workers and 50% of inmates are white.

These examples and others should alert us to the often subtle biases present in AI tools. It’s up to us to critically evaluate the outputs of AI tools and guard against amplifying biases.

Engineering a Responsible AI Revolution

The underlying notion behind each of these guiding principles is the firm belief that humans have to remain in the driver’s seat when it comes to AI. At least as of June 2023, AI doesn’t have judgment, first-hand experience, or delightfully whimsical thoughts. It’s not wise, insightful, ethical, or omniscient. And it’s certainly not any more inevitable than any other piece of software we use.

As creative professionals, it’s our job to decide if, how, and when to use AI in our work. After all, whether generative AI ends up being a Pandora’s Box, a Horn of Plenty, or something else altogether is entirely up to us.